Nonlinear control

Nonlinear control is the area of control engineering that deals with systems that are nonlinear, time-variant, or both. Many well-established analysis and design techniques exist for linear time-invariant (LTI) systems (e.g., root locus, Bode plot, Nyquist criterion, state feedback, pole placement); however, the controller, the system under control, or both may not be LTI, so these methods cannot necessarily be applied directly. Nonlinear control theory studies how to apply existing linear methods to these more general control systems. It also provides novel control methods that cannot be analyzed using LTI system theory. Even when LTI system theory can be used for the analysis and design of a controller, a nonlinear controller can have attractive characteristics (e.g., simpler implementation, increased speed, or decreased control energy); however, nonlinear control theory usually requires more rigorous mathematical analysis to justify its conclusions.


Properties of nonlinear systems

Some properties of nonlinear dynamic systems are:

- They do not follow the principle of superposition (additivity and homogeneity).

- They may have multiple isolated equilibrium points.

- They may exhibit phenomena such as limit cycles, bifurcations, and chaos.

- Finite escape time: Solutions of nonlinear systems may not exist for all times.
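Finite escape time can be illustrated with a standard textbook example that is not part of the original article: the scalar system dx/dt = x² with x(0) = x0 > 0, whose closed-form solution x(t) = x0 / (1 − x0·t) grows without bound as t approaches 1/x0. The sketch below is a minimal illustration under that assumption; the function names, step size, and blow-up threshold are arbitrary choices.

```python
def escape_solution(x0, t):
    """Closed-form solution of dx/dt = x**2 with x(0) = x0 > 0 (valid only for t < 1/x0)."""
    if t >= 1.0 / x0:
        raise ValueError("solution does not exist at or beyond t = 1/x0")
    return x0 / (1.0 - x0 * t)


def euler_escape_time(x0, dt=1e-4, bound=1e6, t_max=10.0):
    """Integrate dx/dt = x**2 by forward Euler.

    Returns the first time at which |x| exceeds `bound`, or None if that
    does not happen before t_max. The threshold is an illustrative stand-in
    for "the solution has escaped".
    """
    x, t = x0, 0.0
    while t < t_max:
        if abs(x) > bound:
            return t
        x += dt * x * x
        t += dt
    return None


# For x0 = 1 the exact solution escapes at t = 1; the numerical estimate
# should land close to that value.
t_blowup = euler_escape_time(1.0)
print("estimated escape time:", t_blowup)
```

By contrast, the linear system dx/dt = x grows exponentially but remains finite for every finite t, which is why finite escape time is listed as a specifically nonlinear property.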

Analysis and control of nonlinear systems

There are several well-developed techniques for analyzing nonlinear feedback systems:

- Describing function method

- Phase plane method

- Lyapunov stability analysis

- Singular perturbation method

- Popov criterion (described in The Lur'e Problem below)

- Center manifold theorem

- Small-gain theorem

- Passivity analysis
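As a small illustration of Lyapunov stability analysis from the list above, consider the scalar system dx/dt = −x³ with the candidate function V(x) = x²/2. Its derivative along trajectories is dV/dt = x·(−x³) = −x⁴ ≤ 0, which suggests that the origin is stable. The example system and the function V are illustrative assumptions, not taken from the article; the sketch only checks numerically that V does not increase along one simulated trajectory.

```python
def simulate(x0, dt=1e-3, steps=5000):
    """Forward-Euler trajectory of the illustrative system dx/dt = -x**3."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(x + dt * (-x ** 3))
    return xs


def V(x):
    """Candidate Lyapunov function V(x) = x**2 / 2."""
    return 0.5 * x * x


traj = simulate(2.0)
values = [V(x) for x in traj]

# V should never increase from one sample to the next along the trajectory.
non_increasing = all(b <= a for a, b in zip(values, values[1:]))
print("V non-increasing along trajectory:", non_increasing)
```

A numerical check of this kind supports, but does not replace, the analytic argument that dV/dt is negative for all x ≠ 0.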

Control design techniques for nonlinear systems also exist. These can be subdivided into techniques that treat the system as linear within a limited range of operation and use (well-known) linear design techniques for each region:

- Gain scheduling

Those that introduce auxiliary nonlinear feedback in such a way that the system can be treated as linear for purposes of control design:

- Feedback linearization

And Lyapunov-based methods:

- Lyapunov redesign

- Nonlinear damping

- Backstepping

- Sliding mode control
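The idea of introducing auxiliary nonlinear feedback so that the system can be treated as linear can be sketched on a toy example. Assume the hypothetical scalar plant dx/dt = f(x) + u with f(x) = x². Choosing u = −f(x) − k·x cancels the nonlinearity and leaves the linear closed loop dx/dt = −k·x. The plant, the gain k, and all function names below are assumptions made for illustration only.

```python
import math


def f(x):
    """Illustrative open-loop nonlinearity (an assumption, not from the article)."""
    return x * x


def control(x, k=2.0):
    """Cancel f(x), then impose the linear closed-loop dynamics dx/dt = -k*x."""
    return -f(x) - k * x


def simulate_closed_loop(x0, k=2.0, dt=1e-3, t_end=2.0):
    """Forward-Euler simulation of dx/dt = f(x) + u with u = control(x, k)."""
    x = x0
    for _ in range(int(round(t_end / dt))):
        x += dt * (f(x) + control(x, k))
    return x


x_final = simulate_closed_loop(1.0)
# The linearized closed loop predicts x(t) = x0 * exp(-k * t).
print(x_final, math.exp(-2.0 * 2.0))
```

The cancellation relies on knowing f exactly; with model error the closed loop is only approximately linear, which is one reason robust methods such as those in the Lyapunov-based list are also used.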

Nonlinear feedback analysis – The Lur'e problem

An early nonlinear feedback system analysis problem was formulated by A. I. Lur'e. Control systems described by the Lur'e problem have a forward path that is linear and time-invariant, and a feedback path that contains a memory-less, possibly time-varying, static nonlinearity.

The linear part can be characterized by four matrices (A,B,C,D), while the nonlinear part is Φ(y) with $\frac{\Phi(y)}{y} \in [a,b],\quad a<b \quad \forall y$ (a sector nonlinearity).
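The sector condition can be probed numerically by sampling the ratio Φ(y)/y. The sketch below is a sampling check under assumed example nonlinearities (tanh and a cubic, neither of which appears in the article); it can refute sector membership on the sampled range but cannot prove it for all y.

```python
import math


def in_sector(phi, a, b, ys, tol=1e-12):
    """Return True if a <= phi(y)/y <= b for every nonzero sample y in ys."""
    return all(a - tol <= phi(y) / y <= b + tol for y in ys if y != 0.0)


# Sample points from -10 to 10 in steps of 0.01 (the range is an arbitrary choice).
ys = [k / 100.0 for k in range(-1000, 1001)]

tanh_in_01 = in_sector(math.tanh, 0.0, 1.0, ys)          # tanh(y)/y lies in (0, 1]
cubic_in_01 = in_sector(lambda y: y ** 3, 0.0, 1.0, ys)  # y**3 / y = y**2 exceeds 1

print(tanh_in_01, cubic_in_01)
```

The cubic fails the check for the sector [0, 1] because its ratio y² is unbounded, even though it satisfies y·Φ(y) > 0 for all y ≠ 0.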

Absolute stability problem

Consider:

- (A,B) is controllable and (C,A) is observable

- two real numbers a, b with a<b, defining a sector for function Φ

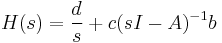

The problem is to derive conditions involving only the transfer matrix H(s) and {a,b} such that x=0 is a globally uniformly asymptotically stable equilibrium of the system. This is known as the Lur'e problem.

There are two main theorems concerning the problem:

- The Circle criterion

- The Popov criterion.

Popov criterion

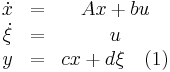

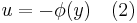

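The sub-class of Lur'e systems studied by Popov is described by:

$$\dot{x} = Ax + bu, \qquad \dot{\xi} = u, \qquad y = cx + d\xi \qquad (1)$$

$$u = -\Phi(y) \qquad (2)$$

where $x \in \mathbb{R}^n$, ξ, u, y are scalars and A, b, c, d have commensurate dimensions. The nonlinear element Φ: R → R is a time-invariant nonlinearity belonging to the open sector (0, ∞). This means that

- Φ(0) = 0, y Φ(y) > 0, ∀ y ≠ 0.

The transfer function from u to y is given by

$$h(s) = \frac{d}{s} + c(sI - A)^{-1}b$$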

Theorem: Consider the system (1)-(2) and suppose

- A is Hurwitz

- (A,b) is controllable

- (A,c) is observable

- d>0 and

- Φ ∈ (0,∞)

then the system is globally asymptotically stable if there exists a number r>0 such that

$$\inf_{\omega \in \mathbb{R}} \operatorname{Re}\left[(1 + j\omega r)\, h(j\omega)\right] > 0 .$$
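The frequency-domain condition can be evaluated on a grid for a concrete example. The sketch below assumes the transfer function has the form h(s) = d/s + c(sI − A)⁻¹b, which is consistent with the pole at the origin described for this class of systems, and uses hypothetical scalar values A = −1, b = c = d = 1 chosen only for illustration. A finite grid of frequencies gives evidence for the inequality but is not a proof of it.

```python
def h(s, a=-1.0, b=1.0, c=1.0, d=1.0):
    """Assumed scalar-state transfer function h(s) = d/s + c*b/(s - a).

    The parameter values are illustrative; a < 0 makes the scalar "A" Hurwitz.
    """
    return d / s + c * b / (s - a)


def popov_margin(r, omegas):
    """Smallest sampled value of Re[(1 + j*w*r) * h(j*w)] over the grid."""
    return min(((1 + 1j * w * r) * h(1j * w)).real for w in omegas)


# Logarithmic grid from 1e-4 to 1e4, mirrored to negative frequencies.
# w = 0 is excluded because h has a pole at the origin.
omegas = [10 ** (k / 50.0) for k in range(-200, 201)]
omegas += [-w for w in omegas]

margin = popov_margin(1.0, omegas)
print("sampled Popov margin for r = 1:", margin)
```

For these particular values the expression simplifies analytically to r + (1 + rω²)/(1 + ω²), which equals 2 for r = 1 at every frequency, so the sampled margin should be close to 2.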

Things to be noted:

- The Popov criterion is applicable only to autonomous systems

- The system studied by Popov has a pole at the origin and there is no direct pass-through from input to output

- The nonlinearity Φ must satisfy an open sector condition

Theoretical results in nonlinear control

Frobenius Theorem

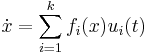

The Frobenius theorem is a deep result in differential geometry. When applied to nonlinear control, it says the following: Given a system of the form

$$\dot{x} = \sum_{i=1}^{k} f_i(x)\, u_i(t)$$

where $x \in \mathbb{R}^n$, $f_1, \dots, f_k$ are vector fields belonging to a distribution $\Delta$ and $u_i(t)$ are control functions, the integral curves of $x$ are restricted to a manifold of dimension $m$ if $\dim \operatorname{span}(\Delta) = m$ and $\Delta$ is an involutive distribution.

Further reading

- A. I. Lur'e and V. N. Postnikov, "On the theory of stability of control systems," Applied mathematics and mechanics, 8(3), 1944, (in Russian).

- M. Vidyasagar, Nonlinear Systems Analysis, 2nd edition, Prentice Hall, Englewood Cliffs, New Jersey 07632.

- A. Isidori, Nonlinear Control Systems, 3rd edition, Springer Verlag, London, 1995.

- H. K. Khalil, Nonlinear Systems, 3rd edition, Prentice Hall, Upper Saddle River, New Jersey, 2002. ISBN 0130673897

- B. Brogliato, R. Lozano, B. Maschke, O. Egeland, "Dissipative Systems Analysis and Control", Springer Verlag, London, 2nd edition, 2007.